If you're interested in running DeepSeek models locally on your Mac, you're in the right place. DeepSeek is a powerful open-source language model that allows you to perform tasks like text generation and data analysis, all while keeping everything on your own machine. This setup ensures faster responses and full control over your data—no need for cloud-based services.

Running DeepSeek locally gives you the benefits of enhanced privacy, reduced latency, and cost savings. Whether you're a developer, researcher, or AI enthusiast, running it on your Mac allows you to fully leverage its capabilities without relying on external servers.

In this guide, we'll walk you through the entire process: from installation to advanced configuration. By the end, you'll know exactly how to run DeepSeek locally on your Mac and maximize its potential.

What is DeepSeek?

DeepSeek is an advanced language model designed to understand and generate human-like text. It uses deep learning techniques to process input and produce coherent, context-aware responses. With applications ranging from creative writing to problem-solving, DeepSeek is a versatile tool for a variety of tasks.

What sets DeepSeek apart is its ability to be run locally on your Mac. This allows you to perform tasks without relying on cloud services, offering greater control over both performance and privacy. Whether you're looking to automate text generation or analyze large datasets, DeepSeek provides a powerful solution directly on your machine.

Why Run DeepSeek Locally Instead of Using Cloud Services?

Running DeepSeek locally on your Mac offers several advantages over using cloud services. First, it enhances privacy by keeping your data within your own system, reducing the risks of sending sensitive information to external servers.

It can also improve responsiveness. A local model does not need an internet connection, so your prompts skip the network round trip to a cloud server. Actual generation speed still depends on your Mac's hardware and the size of the model you run.

Additionally, running DeepSeek on your Mac helps you save on costs. Cloud services often come with recurring fees, especially for heavy usage, while running the model locally eliminates these ongoing expenses.

Finally, customization is easier when the model is on your machine. You have full control over its setup and performance, allowing for fine-tuning based on your specific needs.

System Requirements for Running DeepSeek Models on Mac

Before installing DeepSeek, it's important to ensure that your Mac meets the necessary requirements for smooth operation.

Hardware Requirements

- Operating System: macOS 10.15 (Catalina) or later.

- Processor: Intel or Apple Silicon (M1/M2) chip. The more powerful your processor, the better the performance.

- RAM: At least 8GB of RAM is recommended. For smoother operation, 16GB or more is ideal, especially if you're working with larger models.

- Storage: You’ll need a minimum of 10GB of free space for DeepSeek models and data. More may be required depending on your use case.

Software Requirements

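- Python: Optional. The Ollama app does not need Python; install Python 3.8 or later only if you plan to script against the model.

- Homebrew: Optional. A package manager for macOS that offers an alternative way to install Ollama and other tools.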

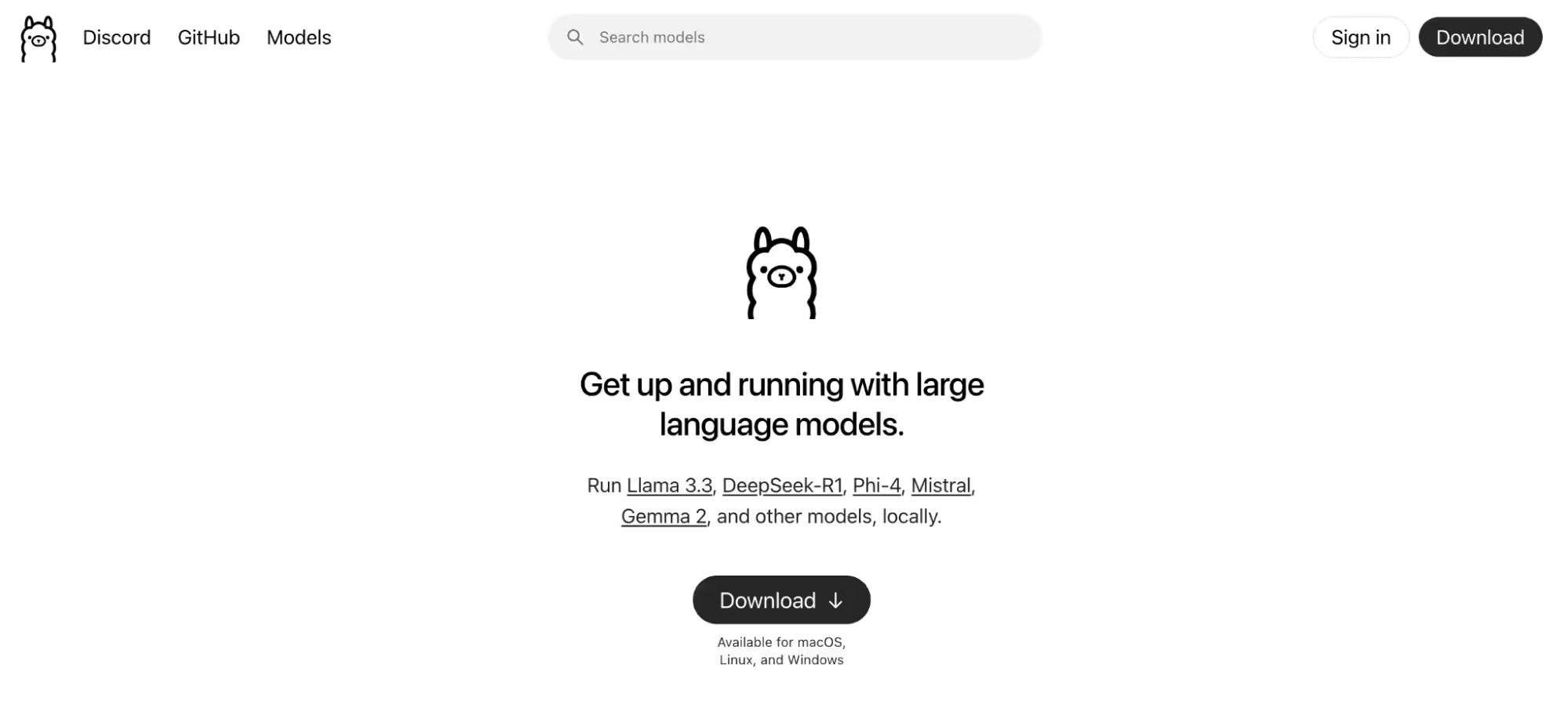

- Ollama: A framework to run AI models locally.

These requirements will ensure that DeepSeek runs smoothly on your Mac.
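If you would rather check these numbers from a script than look them up by hand, the following is a minimal sketch. The thresholds are copied from the list above; the function names are made up for this example, and the RAM lookup assumes the macOS `sysctl -n hw.memsize` command.

```python
import platform
import shutil
import subprocess
from pathlib import Path

GIB = 1024 ** 3

# Thresholds taken from the requirements listed in this guide.
MIN_MACOS = (10, 15)
MIN_RAM_GB = 8
MIN_FREE_GB = 10


def parse_version(text):
    """Turn a version string such as '14.5' into a tuple like (14, 5)."""
    return tuple(int(part) for part in text.split(".") if part.isdigit())


def find_problems(macos_version, ram_gb, free_gb):
    """Return a list of readable problems; an empty list means every check passed."""
    problems = []
    if parse_version(macos_version) < MIN_MACOS:
        problems.append("macOS %s is older than 10.15" % macos_version)
    if ram_gb < MIN_RAM_GB:
        problems.append("%.0f GB RAM is below the 8 GB minimum" % ram_gb)
    if free_gb < MIN_FREE_GB:
        problems.append("%.0f GB free disk is below the 10 GB minimum" % free_gb)
    return problems


def read_this_mac():
    """Collect macOS version, RAM in GB, and free disk in GB (macOS only)."""
    version = platform.mac_ver()[0]
    # `sysctl -n hw.memsize` prints the installed RAM in bytes on macOS.
    ram_bytes = int(subprocess.check_output(["sysctl", "-n", "hw.memsize"]).decode().strip())
    free_bytes = shutil.disk_usage(str(Path.home())).free
    return version, ram_bytes / GIB, free_bytes / GIB


if __name__ == "__main__" and platform.system() == "Darwin":
    for line in find_problems(*read_this_mac()) or ["All requirements met."]:
        print(line)
```

Keeping the comparison in find_problems separate from the lookup in read_this_mac makes it easy to try the check with your own numbers before buying or borrowing a machine.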

Installation Method for DeepSeek on Mac: Using Ollama

Setting up DeepSeek on your Mac using Ollama is a straightforward process that allows you to run powerful AI models locally. Whether you're a command-line user or a beginner, Ollama simplifies the installation and operation of DeepSeek, making it accessible for everyone. Here’s how to get started:

Step 1: Download Ollama

To begin, you need to download Ollama:

1. Visit the Ollama official website

Open your browser and go to the Ollama website.

2. Locate the macOS download link

Find the macOS download link on the website and click it to begin downloading the installer.

3. Save the installer

Make sure to save the downloaded installer in an easily accessible location, such as your Downloads folder.

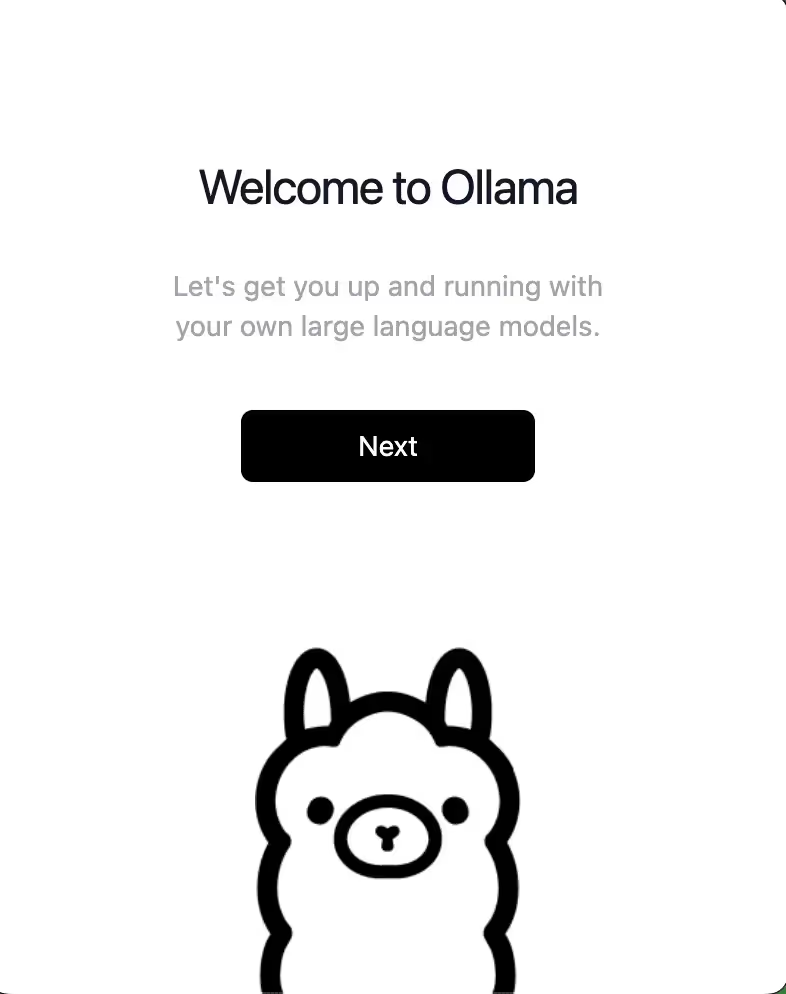

Step 2: Install Ollama on Your Mac

Once the download is complete, follow these steps to install Ollama:

1. Locate the downloaded installer

Go to your Downloads folder (or the location you saved the installer) and find the .dmg file.

2. Open the installer

Double-click the .dmg file to mount it. A window will pop up displaying the Ollama icon.

3. Drag the Ollama icon into the Applications folder

Drag the Ollama icon to your Applications folder. This installs Ollama on your Mac.

4. Launch Ollama

After installation, go to the Applications folder and double-click the Ollama icon to open it. You can also search for it using Spotlight.

Step 3: Download the DeepSeek Model

Now that Ollama is installed, you can download the DeepSeek model:

1. Open the Terminal

Use Spotlight to search for and open the Terminal app on your Mac.

2. Download the DeepSeek model

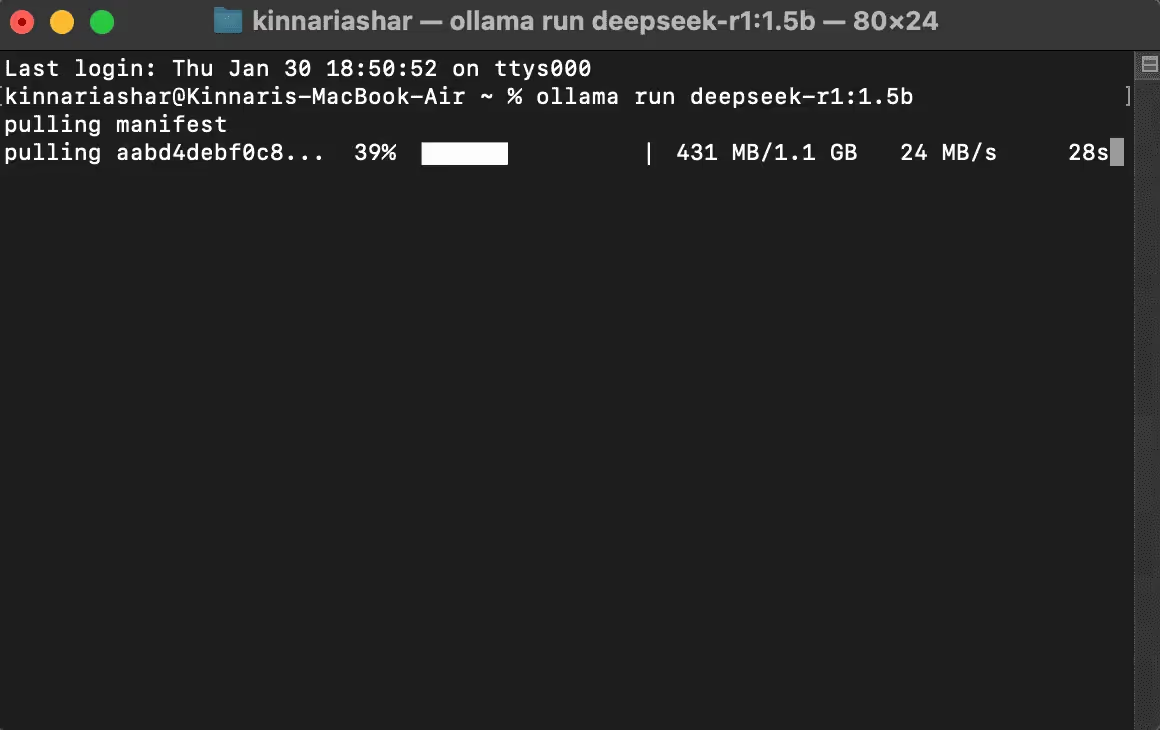

In the Terminal, type the following command to download the DeepSeek model:

ollama run deepseek-r1:1.5b

The first time you run this command, Ollama downloads the model and then starts it. Depending on your internet speed, the download may take a few minutes.

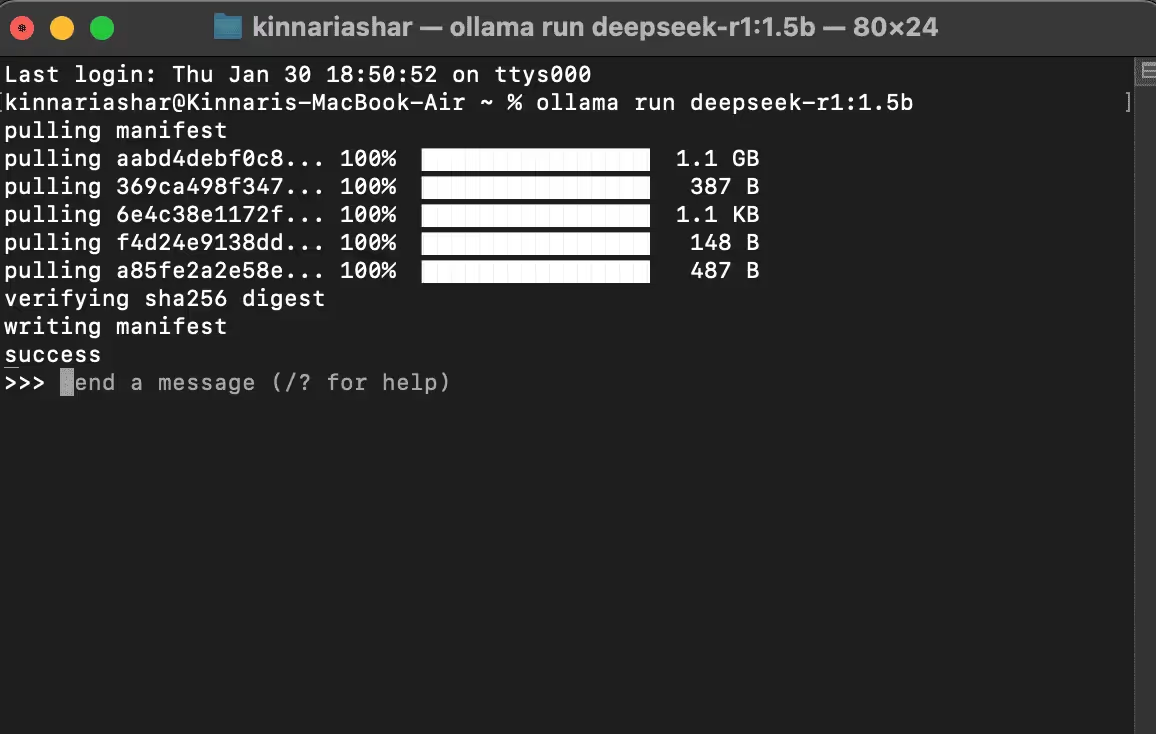
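Once the download finishes, you may want to confirm that the model is really stored on your Mac. The sketch below asks the local Ollama server for its model list through the /api/tags endpoint on the default address http://localhost:11434. The helper names are illustrative, and it assumes the Ollama app is running.

```python
import json
import urllib.request

# Ollama's local server listens on this address by default.
OLLAMA_URL = "http://localhost:11434"


def installed_models(tags_payload):
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [entry["name"] for entry in tags_payload.get("models", [])]


def is_installed(model, tags_payload):
    """True when `model` (for example 'deepseek-r1:1.5b') appears in the payload."""
    return model in installed_models(tags_payload)


def fetch_tags(base_url=OLLAMA_URL, timeout=5):
    """Ask the local Ollama server which models are stored on disk."""
    with urllib.request.urlopen(base_url + "/api/tags", timeout=timeout) as reply:
        return json.loads(reply.read().decode("utf-8"))
```

With the app running, calling is_installed("deepseek-r1:1.5b", fetch_tags()) should return True after a successful download.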

Step 4: Run DeepSeek

Once the model is downloaded, you can start using DeepSeek:

1. Run the model

In the Terminal, type the following command to initiate DeepSeek:

ollama run deepseek-r1:1.5b

This command will launch DeepSeek and start processing your inputs. You will see the model load and initialize in the Terminal.

2. Start interacting with the model

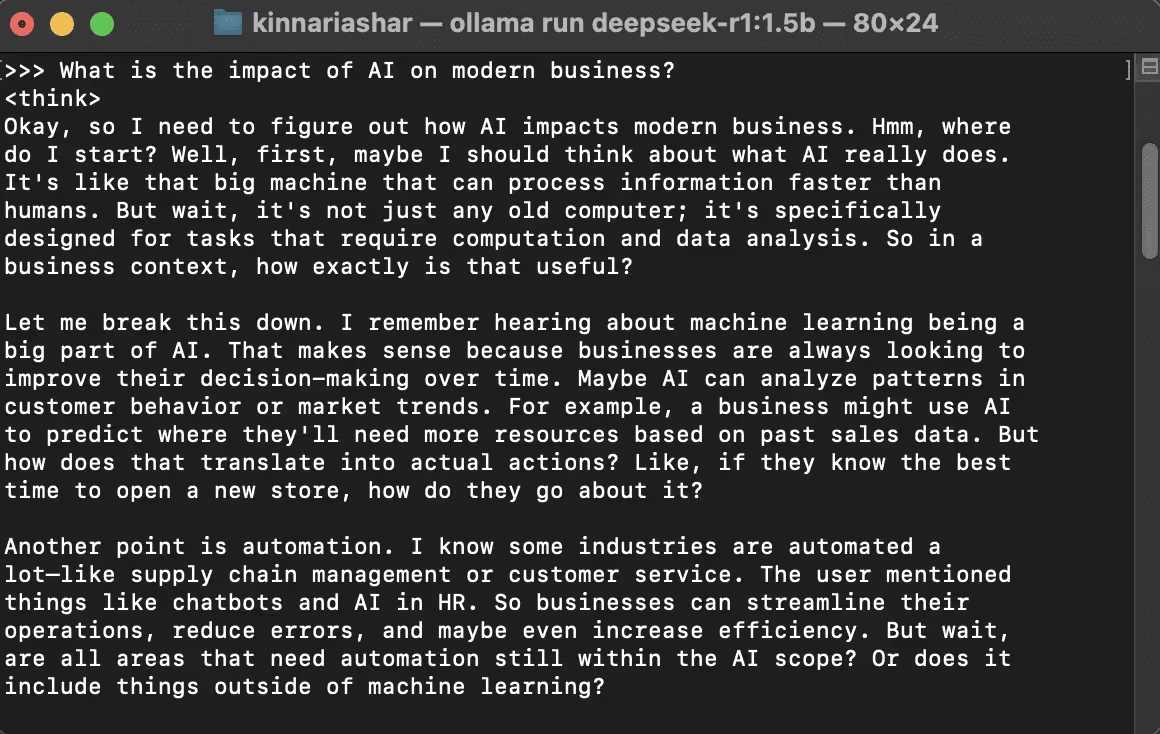

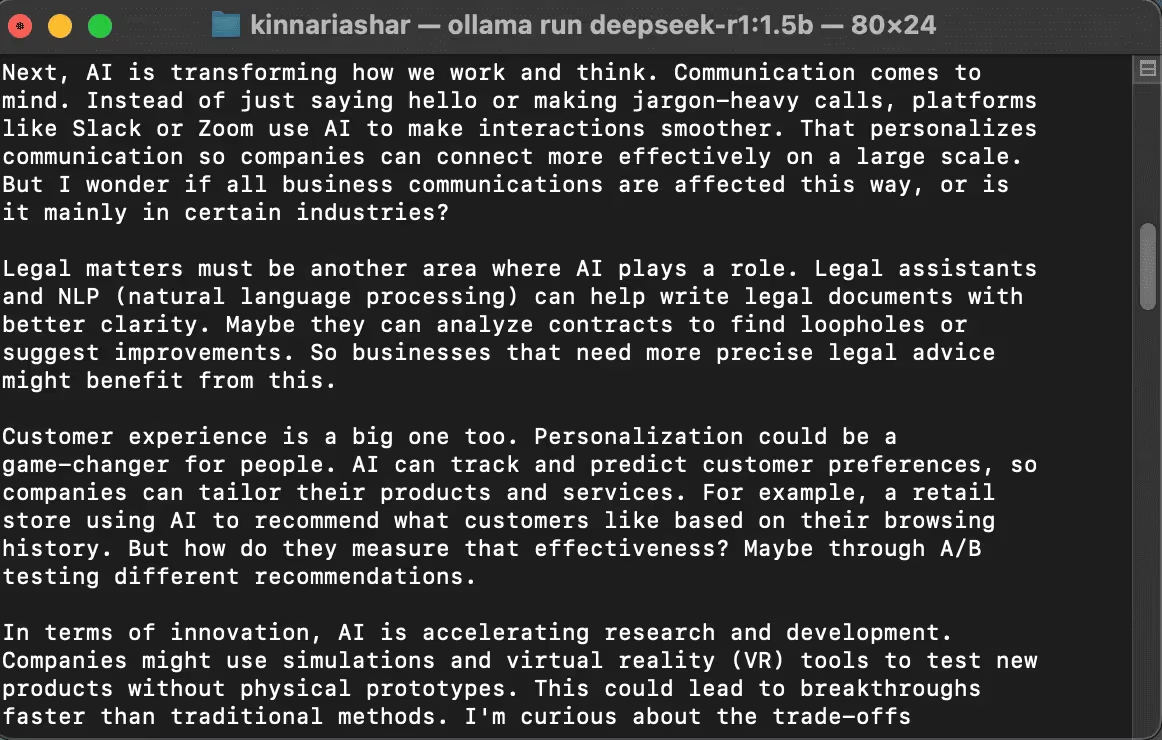

Once the model is running, you can enter prompts directly into the Terminal. For example, I typed:

What is the impact of AI on modern business?

Press Enter, and DeepSeek will generate a response based on your input. You can continue entering prompts to interact with the model in real time.
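The Terminal is not the only way to send a prompt. Ollama also exposes a local REST API, and the sketch below posts one prompt to its /api/generate endpoint with streaming turned off. It assumes the Ollama app is running on the default port 11434 and that the deepseek-r1:1.5b model from the previous steps is installed; build_request and ask are names chosen for this example.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"


def build_request(prompt, model="deepseek-r1:1.5b", base_url=OLLAMA_URL):
    """Create a POST request for Ollama's /api/generate endpoint."""
    # "stream": False asks for one complete JSON reply instead of chunks.
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        base_url + "/api/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="deepseek-r1:1.5b", timeout=300):
    """Send one prompt and return the generated text from the reply."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as reply:
        return json.loads(reply.read().decode("utf-8"))["response"]
```

For example, print(ask("What is the impact of AI on modern business?")) sends the same prompt used above. The generous timeout is a guess to leave room for the model to load on slower Macs.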

Interacting with the Model via ChatBox AI on Mac

If you prefer a more visual and user-friendly experience, using a chatbox interface is an excellent way to interact with the DeepSeek model on your Mac. Here’s how to set it up:

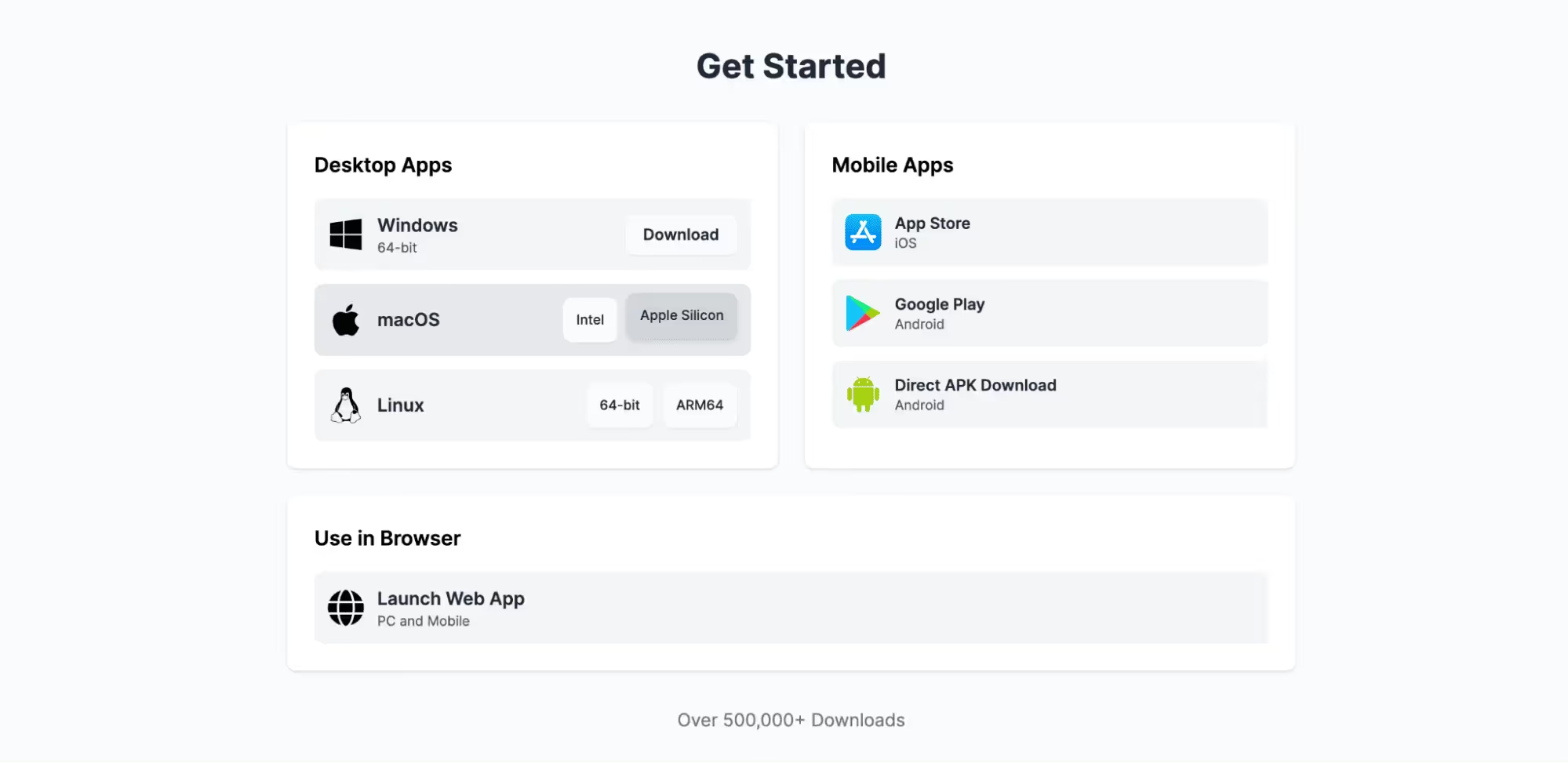

Step 1: Download ChatBox AI

- Visit the ChatBox AI official website.

- Click on the Download for Mac button.

- The installer will automatically download to your Downloads folder.

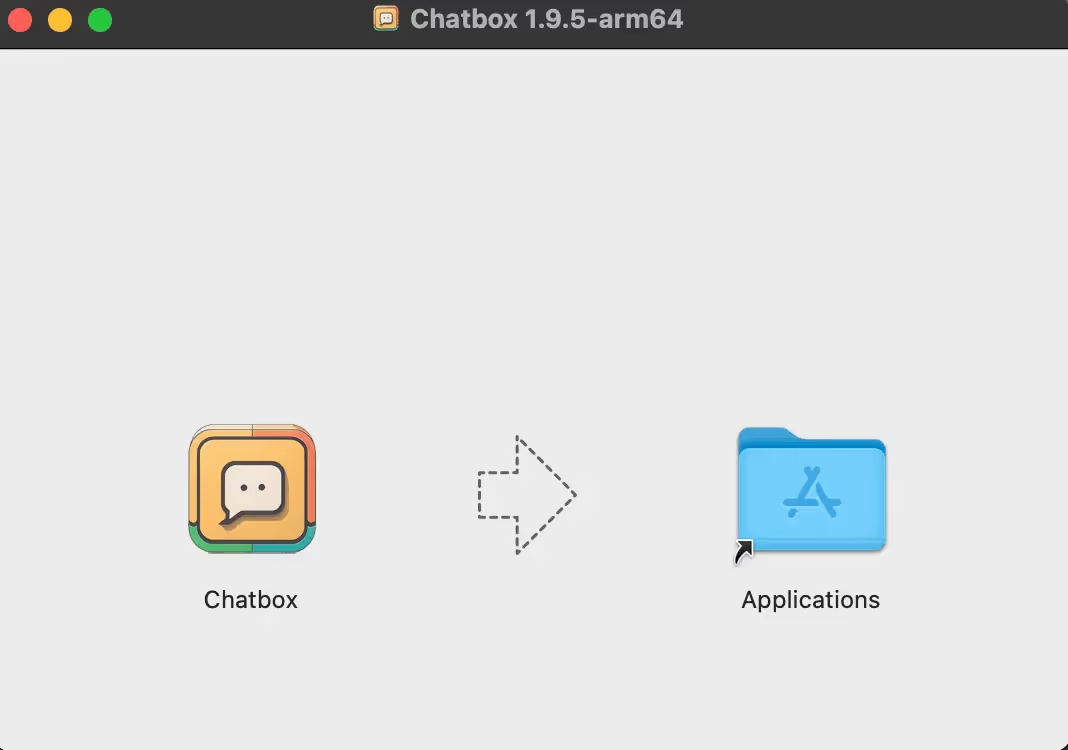

Step 2: Install ChatBox AI

- Once the download is complete, locate the installer file in your Downloads folder.

- Double-click the .dmg file to mount it. A window will pop up showing the ChatBox AI icon.

- Drag the ChatBox AI icon into your Applications folder to install it.

- After installation, open ChatBox AI from your Applications folder.

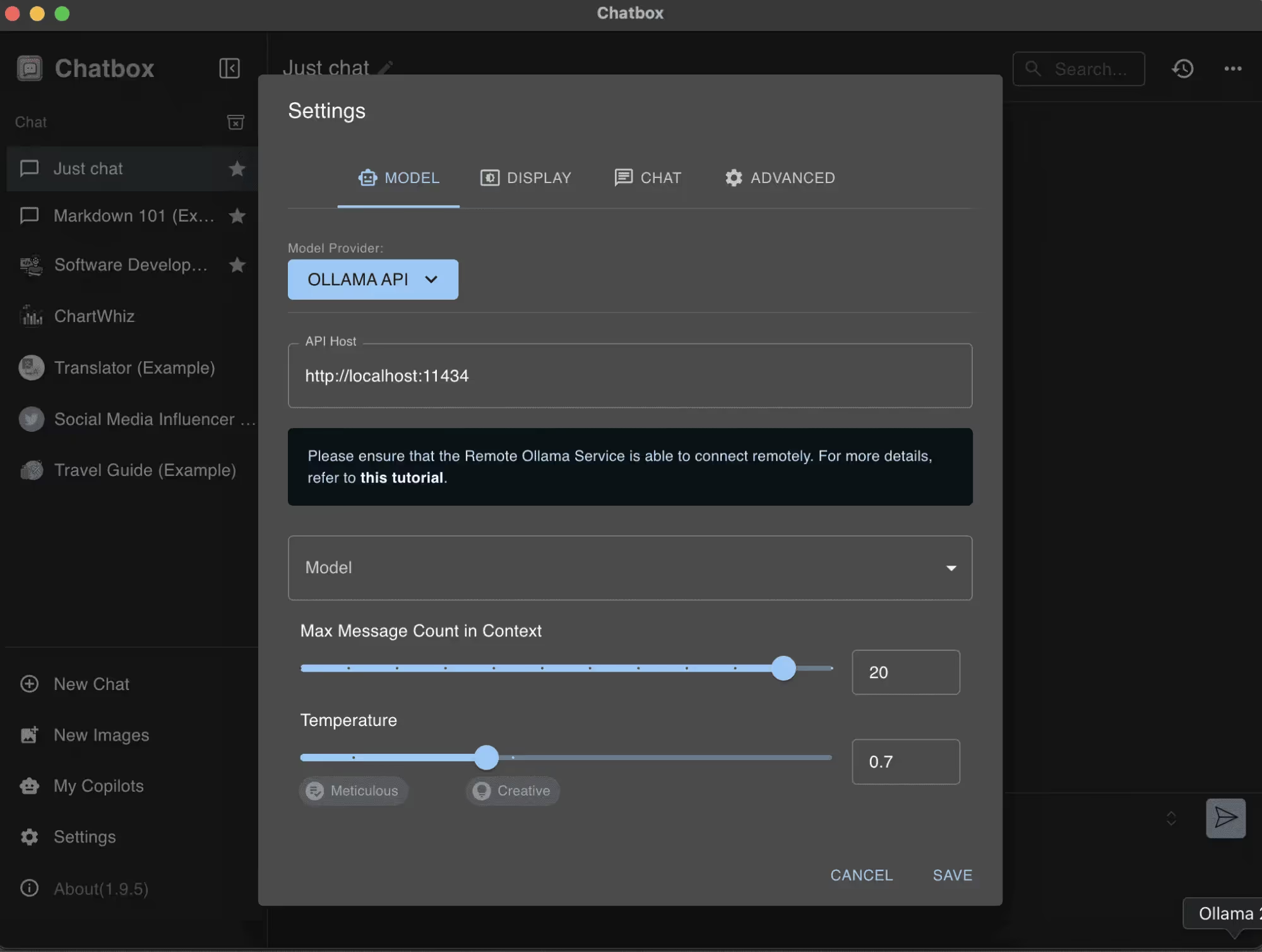

Step 3: Loading the Model

- Open ChatBox AI, then click the “Use Local Model Option” button.

- A list of available AI models will appear. Select DeepSeek from the list.

- In the Model Provider section, choose OLLAMA API, as it’s installed locally on your machine.

- Click Save to confirm the selection.
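ChatBox AI talks to the Ollama server running on your Mac, so if no models show up, it can help to confirm that the server is reachable. This is a small sketch that assumes Ollama's default address http://localhost:11434; server_is_up is a hypothetical helper name.

```python
import urllib.error
import urllib.request


def server_is_up(base_url="http://localhost:11434", timeout=2.0):
    """Return True if an HTTP 200 reply comes back from base_url, else False."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as reply:
            return reply.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error all count as "not up".
        return False
```

If server_is_up() returns False, open the Ollama app from your Applications folder and try again.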

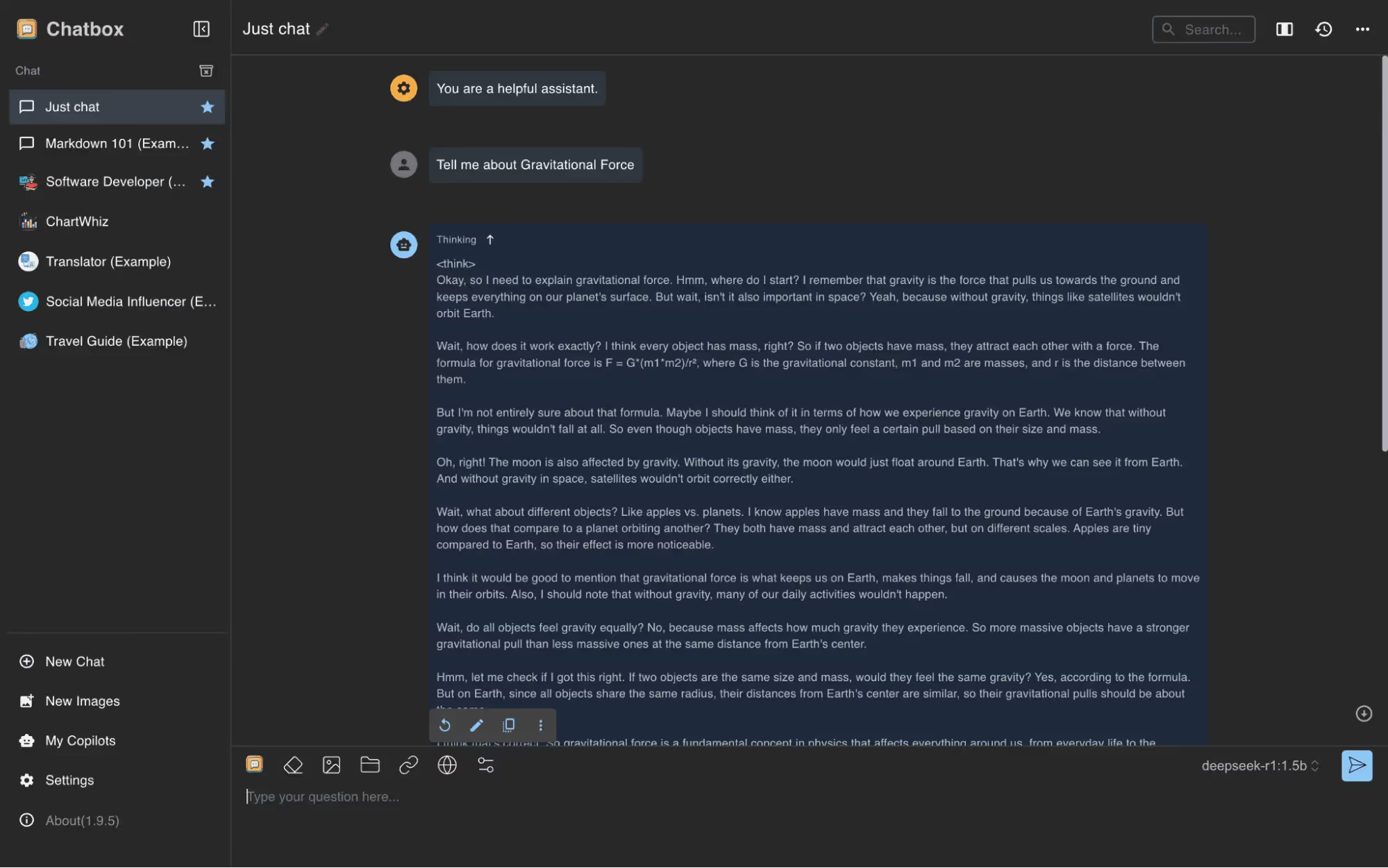

Step 4: Interacting with the Model

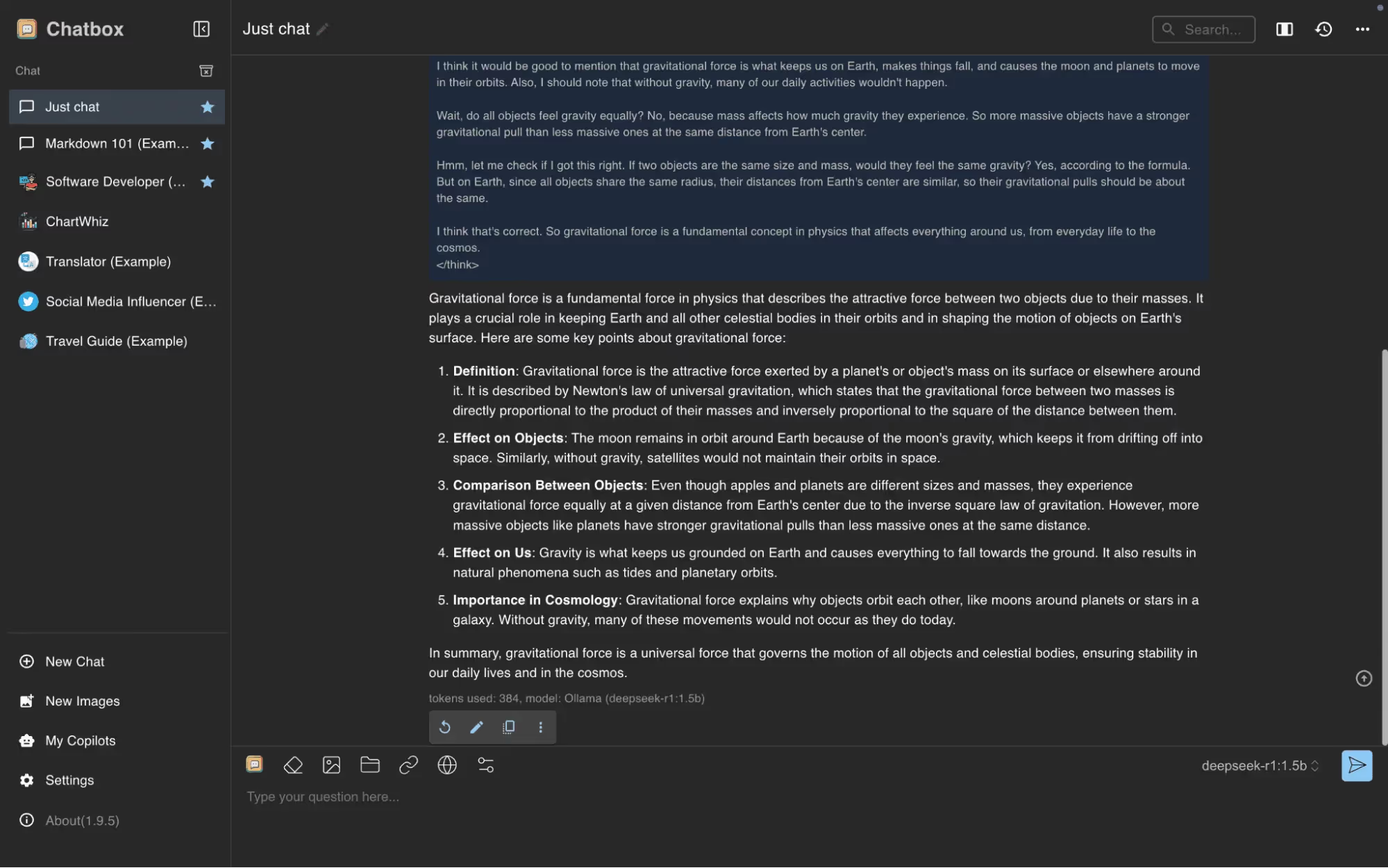

- After loading the DeepSeek model, a ChatBox interface will appear where you can input your prompts.

- Type a prompt; for example, I typed, “Tell me about Gravitational Force,” and hit Enter. DeepSeek will generate a response.

- Continue entering prompts to get real-time responses from DeepSeek.

Using ChatBox AI with DeepSeek on your Mac is an excellent choice for a visually engaging AI experience. It’s simple to set up and lets you interact with DeepSeek in a conversational manner, making it ideal for users who prefer a graphical interface. The setup is straightforward, and with ChatBox AI, you can easily get answers, explore new ideas, or learn more about various topics in real time.

Optimizing DeepSeek Performance on Mac

Once DeepSeek is up and running on your Mac, you may want to optimize its performance, especially if you’re working with large models or running complex tasks. Here are several tips and techniques you can implement to ensure that DeepSeek runs efficiently and smoothly on your system.

1. Allocate More Memory

DeepSeek models can be memory-intensive, especially when working with large datasets or performing complex tasks. Allocating more memory allows the model to process data faster, which can significantly improve performance. If your Mac has a lot of RAM (16GB or more), increasing the memory allocation for DeepSeek can help the model run more smoothly, reducing lag and improving response times. If your system has limited memory, you may need to adjust the allocation to avoid system slowdowns.

2. Use a Smaller Model for Faster Processing

DeepSeek comes in different sizes, and while larger models provide more powerful outputs, they also consume more resources. Running a smaller model can improve performance if your Mac is struggling with larger versions. Smaller models require less memory and processing power, which means they can run faster and more efficiently on machines with limited resources. You can easily switch to a smaller version of DeepSeek depending on your system's specifications.
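To make the trade-off concrete, here is a rough sketch that maps installed RAM to a model tag. The tags follow the deepseek-r1 naming used earlier in this guide, but the RAM thresholds are assumptions chosen for illustration, not figures published by Ollama or DeepSeek, so adjust them to what your Mac handles comfortably.

```python
# (minimum RAM in GB, model tag) pairs, largest model first.
# The thresholds are illustrative assumptions, not official requirements.
CANDIDATES = [
    (64, "deepseek-r1:32b"),
    (32, "deepseek-r1:14b"),
    (16, "deepseek-r1:7b"),
    (0, "deepseek-r1:1.5b"),
]


def pick_tag(ram_gb):
    """Return the largest candidate tag whose RAM threshold fits ram_gb."""
    for minimum, tag in CANDIDATES:
        if ram_gb >= minimum:
            return tag
    # Fall back to the smallest model for unexpected input such as negatives.
    return CANDIDATES[-1][1]
```

You would then pass the chosen tag to the same command used earlier, for example ollama run followed by the result of pick_tag(16).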

3. Close Unnecessary Applications

Running multiple applications simultaneously can drain system resources like CPU and memory, which can affect DeepSeek’s performance. By closing unnecessary apps, you can free up resources to ensure that DeepSeek runs at its best. Use the Activity Monitor to identify apps that consume high amounts of CPU or memory and close them to improve DeepSeek’s performance. This is a simple but effective way to keep your system optimized.

4. Keep macOS Up-to-Date

Performance issues can often arise from outdated software. Ensuring that your macOS is up-to-date will not only improve the overall performance of your system but also ensure compatibility with DeepSeek. macOS updates often contain performance enhancements, bug fixes, and new features that can make running DeepSeek more efficient. Check for updates regularly under System Settings > General > Software Update (System Preferences > Software Update on macOS Monterey and earlier).

5. Use a Solid-State Drive (SSD)

If your Mac is still running on a traditional hard drive (HDD), upgrading to an SSD can significantly boost performance. SSDs offer much faster read and write speeds, which can help DeepSeek load and process data more quickly. If you're working with large models, an SSD will help reduce latency and improve response times, making your overall DeepSeek experience much smoother.

Troubleshooting Common Issues with DeepSeek on Mac

Even with proper installation and optimization, you might encounter issues while running DeepSeek locally on your Mac. In this section, we’ll address some common problems and provide solutions to help you troubleshoot effectively.

1. DeepSeek Model Won’t Download

If you are unable to download the DeepSeek model, it could be due to issues with your internet connection or insufficient disk space.

Possible Solutions:

- Check Your Internet Connection: Ensure your internet connection is stable and has enough bandwidth to download large files.

- Re-run the Download: If the download was interrupted, try running the download command again. Make sure there are no interruptions in the connection.

- Verify Disk Space: Ensure your Mac has enough storage space for the DeepSeek model. If your disk is full, the download will fail.

If the problem persists, restart your Mac and try downloading the model again.
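Re-running an interrupted download can also be automated. The sketch below retries a command a few times with a pause in between; run_with_retries is a name invented for this example, and the attempt count and delay are arbitrary defaults.

```python
import subprocess
import time


def run_with_retries(command, attempts=3, delay_seconds=5.0):
    """Run `command` until it exits with status 0 or the attempts run out.

    Returns the number of the attempt that succeeded, or 0 if none did.
    """
    for attempt in range(1, attempts + 1):
        try:
            if subprocess.run(command).returncode == 0:
                return attempt
        except FileNotFoundError:
            # The program itself is missing, so retrying cannot help.
            return 0
        if attempt < attempts:
            time.sleep(delay_seconds)
    return 0
```

For the model in this guide you could call run_with_retries(["ollama", "pull", "deepseek-r1:1.5b"]). The ollama pull command downloads a model without opening a chat session.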

2. DeepSeek Runs Slowly or Crashes

If DeepSeek is running slowly or crashing, it could be due to insufficient system resources or an improperly configured setup.

Possible Solutions:

- Allocate More Memory: If you have enough RAM on your Mac, try allocating more memory to DeepSeek to enhance processing speed.

- Close Unnecessary Apps: Running too many applications can consume valuable system resources. Close any apps you’re not actively using to free up memory for DeepSeek.

- Reduce Model Size: If you’re using a large version of DeepSeek, try switching to a smaller model that requires less memory and CPU.

If DeepSeek continues to crash, ensure that macOS and Ollama are both up to date.

3. Command Line Issues with Ollama

If you encounter problems when using the Terminal with Ollama, it could be due to an incomplete or incorrect installation.

Possible Solutions:

- Verify Installation: Check if Ollama is installed correctly by running the command ollama --version. If it’s not recognized, reinstall Ollama.

- Check for Dependencies: The Ollama app installed from the .dmg file is self-contained and does not need Python or Homebrew. If you installed Ollama through Homebrew instead, make sure Homebrew is properly installed and updated.

- Reinstall Ollama: If the issue persists, reinstall Ollama to ensure you have the latest version. If you installed it through Homebrew, use the command brew reinstall ollama; otherwise, download the installer again from the Ollama website.

4. Slow Performance or Latency

Even after everything is set up, you might experience slow performance or latency when running DeepSeek, especially with larger models.

Possible Solutions:

- Optimize System Settings: Ensure that your system is optimized by closing unnecessary background apps and checking for macOS updates.

- Run a Smaller Model: Switch to a smaller version of DeepSeek if you experience latency, particularly on Macs with limited memory or lower processing power.

- Monitor System Resources: Use Activity Monitor to check CPU and memory usage. If DeepSeek is using too many resources, adjust the model size or batch size for better performance.
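If you prefer a quick text summary over Activity Monitor, the sketch below lists the processes using the most memory. It relies on the standard ps tool with the rss (resident memory in kilobytes) and comm (command) columns; the function names are illustrative.

```python
import subprocess


def parse_ps(output):
    """Parse lines of '<rss in KB> <command>' into (megabytes, command) pairs."""
    rows = []
    for line in output.splitlines():
        parts = line.strip().split(None, 1)
        if len(parts) == 2 and parts[0].isdigit():
            rows.append((int(parts[0]) / 1024, parts[1]))
    return rows


def top_memory(rows, count=5):
    """Return the `count` largest entries, biggest first."""
    return sorted(rows, reverse=True)[:count]


def snapshot():
    """Capture resident memory per process using `ps`."""
    # "rss=" and "comm=" print each column without a header row.
    command = ["ps", "-ax", "-o", "rss=", "-o", "comm="]
    return subprocess.check_output(command).decode("utf-8", "replace")
```

Calling top_memory(parse_ps(snapshot())) returns the five heaviest processes, which makes it easy to see whether Ollama or another app is using most of your memory.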

5. Ollama Command Line Issues or Errors

If you encounter errors or issues when running Ollama from the command line, it could be due to missing dependencies, outdated software, or configuration problems.

Possible Solutions:

- Verify Installation: Ensure that Ollama is properly installed by running ollama --version in the Terminal. If it's not recognized, reinstall Ollama to ensure a clean installation.

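- Check Dependencies: The Ollama app installed from the .dmg file is self-contained and does not depend on Python or Homebrew. If you installed Ollama through Homebrew instead, ensure Homebrew is correctly installed and up-to-date on your Mac.

- Reinstall Ollama: If errors persist, try reinstalling Ollama. If you installed it through Homebrew, use the command brew reinstall ollama to get the latest version; otherwise, download the installer again from the Ollama website and repeat the installation steps.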
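The installation check can be scripted as well. This minimal sketch looks for the ollama command on your PATH and, if it is found, returns whatever ollama --version prints; cli_version is a hypothetical helper name.

```python
import shutil
import subprocess


def cli_version(binary="ollama"):
    """Return the output of `<binary> --version`, or None if it is not on PATH."""
    path = shutil.which(binary)
    if path is None:
        return None
    result = subprocess.run(
        [path, "--version"],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        universal_newlines=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    version = cli_version()
    print(version if version else "ollama was not found on PATH; try reinstalling it")
```

A result of None means the Terminal cannot find the command at all, which points to an installation problem rather than a problem with the model.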

Conclusion

Running DeepSeek locally on your Mac provides a powerful, private, and cost-effective way to leverage AI capabilities without depending on cloud-based services. By following the steps outlined in this guide, you can easily set up DeepSeek using Ollama, optimize its performance for your system, and troubleshoot common issues that may arise.

Whether you prefer Ollama's command line or a graphical interface such as ChatBox AI, DeepSeek offers flexibility to suit your needs. With the right optimizations and configurations, you'll experience faster responses, greater privacy, and full control over your AI interactions, all from the comfort of your own machine.

Now that you have all the tools and information, you're ready to dive deeper into DeepSeek and start exploring its full potential.

FAQs About Running DeepSeek Models Locally on Mac

What are the system requirements for running DeepSeek locally on Mac?

You'll need macOS 10.15 or later, at least 8GB of RAM (16GB recommended), 10GB of free storage, and a multi-core Intel or Apple Silicon processor for optimal performance.

Can I run DeepSeek offline once the model is downloaded?

Yes, after downloading the DeepSeek model, you can run it offline. An internet connection is only required for the initial download of the model.

Which method is better for running DeepSeek: Ollama or another method?

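If you're comfortable with the command line and prefer speed, Ollama is a great choice. It's simple and effective for quick setup. However, if you're looking for a more visual approach with a GUI, ChatBox AI is ideal. In this guide, ChatBox AI connects to the same local Ollama installation, so you still need Ollama installed.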

How can I optimize DeepSeek’s performance on my Mac?

To optimize DeepSeek, allocate more memory, reduce the batch size for faster processing, use a smaller model version, and ensure your macOS is up to date. Additionally, close unnecessary applications to free up system resources.

What should I do if DeepSeek runs slowly or crashes?

If DeepSeek is running slowly or crashing, consider allocating more memory, reducing the model size, or closing other applications. Check for software updates for both macOS and Ollama to resolve potential compatibility issues.
